Photogrammetry

The physical objects were chosen before the dramaturgy process for their aesthetic value, period appropriateness and manageable size. Photogrammetry proofs were begun early in the project to validate the technique's suitability for object creation and the realism of its results. Initial testing used a small object whose fine details, wear and single-colour tone made it suitable for assessing several factors: photographic quality, the number of images needed, how to capture small details, and which photogrammetry software best suited the results we wanted to achieve. Thirty-six images were captured in RAW format with a Canon EOS Rebel T5i DSLR on a cloudy day in October 2018, circling the object to take shots from two angles, plus close-ups for detail processing. The object itself was not moved. The time of day (early afternoon) and the location (a textured concrete patio in diffuse northern light) were chosen to light the subject flatly and minimize shadows.

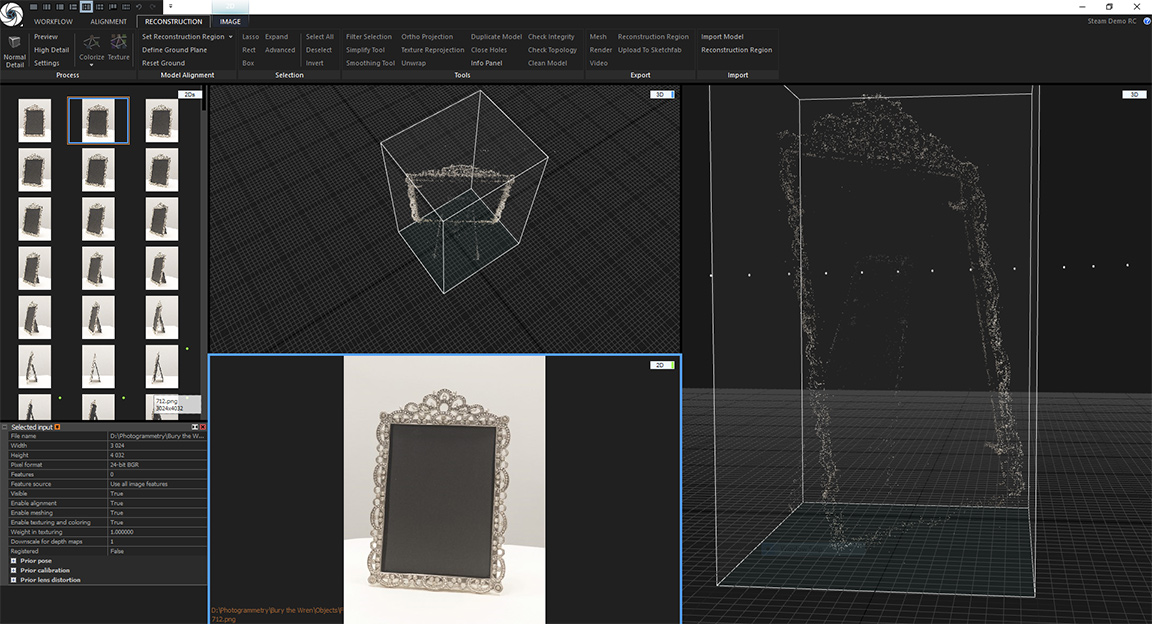

Three photogrammetry software packages were selected to process the test images and compare the results. After several test runs with each package, adjusting parameters to discover what most affected point cloud accuracy and texture quality, RealityCapture became the clear choice. Even on the small sample of images tested, RealityCapture produced an effective replica of the photographed object: geometry clean-up and texture adjustment were still required, but most of the mesh was generated and small details were retained.

Photogrammetry software works by virtually reconstructing the camera position for each photograph, re-projecting each image from those positions, and then building a three-dimensional re-creation of the object from the combined overlapping image and position information. Once the camera positions have been defined by aligning the images around the photographed object, a dense point cloud is created that describes the object in X, Y and Z coordinate space. The point cloud is then used as the reference for a high-density polygonal mesh which, depending on the level of detail, usually runs to between five and ten million polygons. Factors such as shadows, contrast and easily identifiable features affect the quality of the point cloud and mesh, as does the number of photographs used to align the virtual camera array. More images do not always produce a more accurate or better scan; often the overlap between images, combined with varied capture angles, matters most in producing a reasonable to exceptional 3D re-creation. Using more images also increases the processing required, a significant consideration when choosing software: depending on the algorithm, minutes of calculation can easily turn into hours every time a parameter needs to be modified.
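The re-projection idea can be illustrated with a minimal sketch of the underlying geometry: once two camera positions are known, a feature seen in both images defines two viewing rays, and the 3D point is estimated where those rays come closest. This is only an illustration under simplifying assumptions (known camera positions, one feature, two views) and is not how RealityCapture or any other package is implemented. The function name `triangulate_midpoint` and the camera coordinates are hypothetical.

```python
import math


def triangulate_midpoint(origin_a, dir_a, origin_b, dir_b, eps=1e-12):
    """Estimate a 3D point from two viewing rays (hypothetical helper).

    Each ray starts at a camera position and points towards the place where
    the same feature was seen. Real rays rarely intersect exactly, so the
    midpoint of their closest approach is returned.
    """
    def dot(u, v):
        return sum(x * y for x, y in zip(u, v))

    w = [p - q for p, q in zip(origin_a, origin_b)]
    a, b, c = dot(dir_a, dir_a), dot(dir_a, dir_b), dot(dir_b, dir_b)
    d, e = dot(dir_a, w), dot(dir_b, w)

    denom = a * c - b * b  # zero when the two rays are parallel
    if abs(denom) < eps:
        raise ValueError("rays are (nearly) parallel; depth cannot be recovered")

    s = (b * e - c * d) / denom  # distance parameter along ray A
    t = (a * e - b * d) / denom  # distance parameter along ray B
    point_a = [o + s * k for o, k in zip(origin_a, dir_a)]
    point_b = [o + t * k for o, k in zip(origin_b, dir_b)]
    return tuple((p + q) / 2 for p, q in zip(point_a, point_b))


if __name__ == "__main__":
    # Assumed example: two cameras four units apart viewing one feature.
    feature = (1.0, 2.0, 3.0)
    cam_a, cam_b = (0.0, 0.0, 0.0), (4.0, 0.0, 0.0)
    ray_a = [f - c for f, c in zip(feature, cam_a)]
    ray_b = [f - c for f, c in zip(feature, cam_b)]
    print(triangulate_midpoint(cam_a, ray_a, cam_b, ray_b))
```

The `denom` term shrinks as the two rays approach parallel, which is one way to see why varied capture angles can matter more than the sheer number of images: views taken from almost the same direction constrain depth poorly.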

The physical characteristics of the objects to be scanned are also an important consideration. Each of the five objects we chose to scan had its own issues and, as novice photogrammetry practitioners, we made many mistakes that led to incomplete mesh generation and a lack of detail in parts of the geometry, requiring considerable manual adjustment in other 3D modelling and texturing applications. In the photogrammetry documentation and tutorials we surveyed, the physical traits of the object to be scanned are often neglected or assumed. Given their importance to the process, they should be highlighted as a crucial factor in choosing objects to scan.